Contact

| Office | A440 |

| Address | Bd de Pérolles 90, 1700 Fribourg, Switzerland |

| Email | yongjoon.thoo(at)unifr.ch |

| ORCID | |

| GitHub | |

| Google Scholar | |

Background

- MSc in Robotics, École Polytechnique Fédérale de Lausanne (EPFL)

- BSc in Microengineering, École Polytechnique Fédérale de Lausanne (EPFL)

Yong-Joon Thoo

PhD Candidate in Computer Science, University of Fribourg

MSc in Robotics and BSc in Microengineering, École Polytechnique Fédérale de Lausanne (EPFL)

Research interests

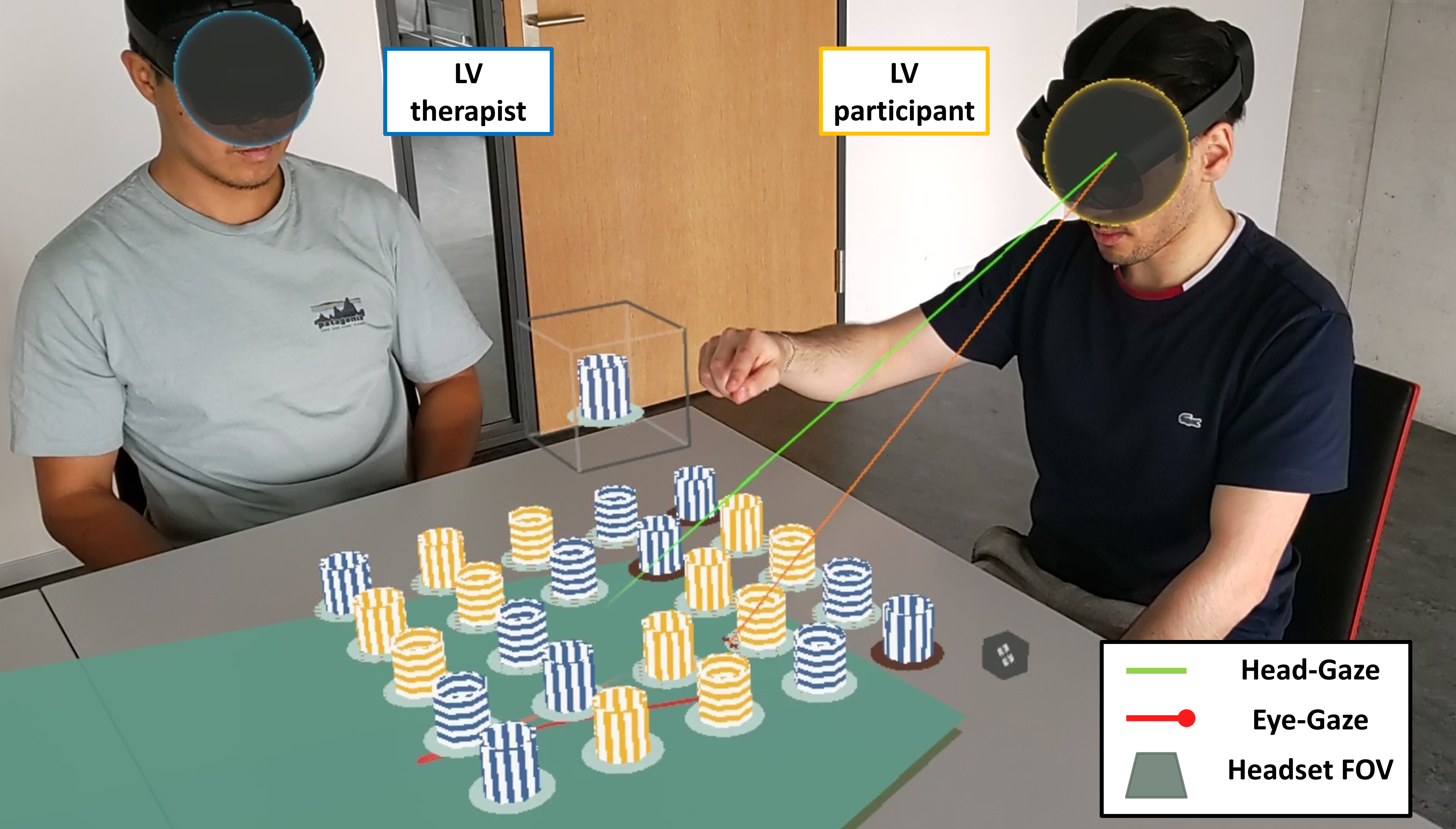

My research focuses on advancing vision rehabilitation for blind and low-vision (BLV) individuals through co-located shared augmented reality (AR) systems. I design interactive training tasks that mirror real-world activities of daily living (ADLs), enabling clients to practice adaptive strategies in familiar environments. In this approach, both clients and low-vision therapists wear AR headsets, creating a shared environment where therapists can directly observe clients’ visual behaviors (e.g., gaze patterns, scanning strategies), provide tailored feedback in real time, and adapt training parameters to better support individual needs. Conducted in collaboration with vision rehabilitation centers, this work aims to foster engagement, improve accessibility, and strengthen the effectiveness of therapist-guided training.

More broadly, I am interested in assistive and rehabilitative systems and have a background in robotics, where I have worked on both mobility support systems (e.g., robotic wheelchairs) and AR/VR-based functional assessment and training tools.

My research interests include:

- Mixed Reality (MR)

- Human-Computer Interaction

- Human-Robot Interaction

- Multimodal Interaction

Publications (* denotes equal contribution)

- (To appear in AlpCHI'26) Yong-Joon Thoo, Karim Aebischer, Nicolas Ruffieux, and Denis Lalanne. 2026. Real-Time Object Detection and Augmented Reality to Support Low-Vision Navigation and Object Localization: A Demonstration.

- Yong-Joon Thoo, Karim Aebischer, Nicolas Ruffieux, and Denis Lalanne. 2025. Enhancing Therapist-Guided Low-Vision Training with Projected Gaze Behaviors in Co-Located Shared AR. In ACM Symposium on Spatial User Interaction (SUI ’25), November 10–11, 2025, Montreal, QC, Canada. ACM, New York, NY, USA, 15 pages. https://doi.org/10.1145/3694907.3765924

- Yong-Joon Thoo, Karim Aebischer, Nicolas Ruffieux, and Denis Lalanne. 2025. Exploring Shared Augmented Reality for Low-Vision Training of Activities of Daily Living. In The 27th International ACM SIGACCESS Conference on Computers and Accessibility (ASSETS ’25), October 26–29, 2025, Denver, CO, USA. ACM, New York, NY, USA, 19 pages. https://doi.org/10.1145/3663547.3746371

- Maximiliano Jeanneret Medina*, Yong-Joon Thoo*, Cédric Baudet, Jon E. Froehlich, Nicolas Ruffieux, and Denis Lalanne. 2025. Understanding Research Themes and Interactions at Scale within Blind and Low-vision Research in ACM and IEEE. ACM Trans. Access. Comput. 18, 2, Article 7 (June 2025), 46 pages. https://doi.org/10.1145/3726531

- Maximiliano Jeanneret Medina, Yong-Joon Thoo, and Cédric Baudet. 2024. "3.1 Accessibilité numérique: évolution et état actuel". Innovation Booster Technologie et Handicap : Un dispositif, des personnes engagées et des projets pour une innovation sociale par la science (p. 157-169). Éditions Sociographe. https://doi.org/10.3917/agraph.nenc.2024.01.0157

- Yong-Joon Thoo*, Maximiliano Jeanneret Medina*, Jon E. Froehlich, Nicolas Ruffieux, and Denis Lalanne. 2023. A Large-Scale Mixed-Methods Analysis of Blind and Low-vision Research in ACM and IEEE. In The 25th International ACM SIGACCESS Conference on Computers and Accessibility (ASSETS ’23), October 22–25, 2023, New York, NY, USA. ACM, New York, NY, USA, 20 pages. https://doi.org/10.1145/3597638.3608412

- Yong-Joon Thoo, Jérémy Maceiras, Philip Abbet, Mattia Racca, Hakan Girgin, and Sylvain Calinon. 2021. Online and Offline Robot Programming via Augmented Reality Workspaces. https://doi.org/10.48550/arXiv.2107.01884

Teaching

Current courses

Past courses

- HCI Seminar [Spring 2025], Teaching Assistant

- Multimodal User Interfaces [Spring 2025], Teaching Assistant

- HCI Seminar [Spring 2022, Autumn 2023], Teaching Assistant

- Multimodal User Interfaces [Spring 2022, Spring 2023, Spring 2024], Teaching Assistant

- Prototyping and Fabrication in the FabLab [Autumn 2022, Autumn 2023], Teaching Assistant

MSc / BSc Theses Supervision

Ongoing

- Benjamin Del Don, Integrating YOLO ORB SLAM via an Intel Realsense on a Mobile Robot to Detect Indoor Objects for Blind and Low-Vision People [BSc]

Completed

- Kimmy Costa, Investigating the Advantages of Haptic Feedback during Low-Vision Rehabilitation [MSc, 2024]

- Morgane Kappeler, Investigating the possibilities of eye gaze visualizations in on-site AR collaboration game [BSc, 2024]

- Sophie Maudonnet, An Augmented Reality based Sandbox to Investigate the Potential of On-Site Collaborative Tasks [BSc, 2024]

- Mikkeline Elleby, Collaborative Augmented Reality Platform: A Feasibility Study [BSc, 2023]

- Loris Bertschi, Co-design of an AR Rehabilitation Task for People with Visual Impairment [BSc, 2023]

- Cédric Membrez, AR Escape Room to Support the Rehabilitation of People with Visual Impairment [MSc, 2023]

- Karim Aebischer, A Gamified Mobile Application for Approach-Avoidance Tasks [MSc, 2022]

Events

Conferences

- (Presenter) at the ACM Symposium on Spatial User Interaction (SUI 2025), where I presented my paper entitled "Enhancing Therapist-Guided Low-Vision Training with Projected Gaze Behaviors in Co-Located Shared AR."

- (Presenter) at the 27th International ACM SIGACCESS Conference on Computers and Accessibility (ASSETS 2025), where I presented my paper entitled "Exploring Shared Augmented Reality for Low-Vision Training of Activities of Daily Living."

- (Presenter) at the 25th International ACM SIGACCESS Conference on Computers and Accessibility (ASSETS 2023), where I presented my paper entitled "A Large-Scale Mixed-Methods Analysis of Blind and Low-vision Research in ACM and IEEE."

Workshops

- (Co-organiser) of the MISTI MIT Media Lab x Smart Living Lab Workshop - Part 2 hosted at the MIT Media Lab in Cambridge, Massachusetts, USA.

- (Co-organiser) of the MISTI MIT Media Lab x Smart Living Lab Workshop - Part 1 hosted at the Human-IST Institute in Fribourg, Switzerland.